Model Farm User Guide

Overview

To speed up the evaluation of AI model performance on target edge devices, APLUX has built Model Farm. Model Farm hosts hundreds of mainstream open-source models covering different tasks, optimized for different hardware platforms, and provides benchmark performance references based on real-device testing. Developers can quickly complete evaluations that match their requirements without investing substantial cost and time.

Model Farm also provides ready-to-run inference example code. This reduces the effort needed to test model performance and develop AI applications on edge devices, shortens the overall development cycle, and accelerates solution deployment.

Features

Specifically, Model Farm can help developers accomplish the following:

- Query performance references for AI models on specific Qualcomm chips

- Download optimized AI models (using the NPU to accelerate inference)

- Download pre/post-processing and model inference example code

- View model conversion & optimization steps, which help developers quickly optimize their own fine-tuned models

Models on Model Farm

Fine-tuned Models by User

Model Management Service (MMS)

APLUX provides the Model Management Service (MMS), which lets developers obtain model information and code packages directly on the edge device from the terminal, without going through the Model Farm website.

Quick Start

The following steps show how to deploy an AI model on an edge device through Model Farm:

We also provide a concrete example to help developers get started with Model Farm quickly: Deploy YOLOv5s Model to Qualcomm QCS8550 through Model Farm

Preparation

Prepare Development Board

Models on Model Farm have been optimized for Qualcomm Dragonwing IoT chip platforms and performance-tested on development boards built on Qualcomm chips. Currently supported Qualcomm IoT chips include:

- Qualcomm Dragonwing QCS6490

- Qualcomm Dragonwing QCS8550

Prepare Development Tools & Activation

💡Note

If you are using a development board provided by APLUX, the development tools are pre-installed and the environment is already activated, so you can skip this step.

APLUX provides developers with an easy-to-use edge AI application development toolkit (AidLux SDK), accelerating end-to-end AI application development across different hardware platforms.

The development kits provided by APLUX must be used in an activated environment. Activation is performed through the LMS component included in the AidLux SDK.

To support different development boards and environments, APLUX offers pre-installed development tools and activation services:

| Development Board | AidLux SDK Pre-installed | Activation |
|---|---|---|
| APLUX AidLux OS Dev Board | ✅ | ✅ |
| APLUX Linux Container Dev Board | ✅ | ✅ |
| Third-party Dev Boards | Contact APLUX for support | Contact APLUX for support |

- For more information about AidLux SDK, see Development Kit

- For activation steps, refer to Activation Guide

💡Note

For third-party development boards, Model Farm still provides optimized model files, and developers can build applications on their own. If developers need to use AidLux SDK, please contact APLUX for the relevant software and installation guidance.

Prepare Developer Account

Developers can browse model information and performance on Model Farm without logging in.

Developers need to log in with an APLUX developer account to download models and example code.

- Register as an APLUX developer

- Please visit: Developer Account Registration

- Fill in developer information according to the registration form prompts and requirements

- Submit the account creation request after the information is filled in correctly

Log in to Model Farm

Web Login

Please visit Model Farm

MMS Login

APLUX development board users can also download models through the Model Management Service (MMS) provided by AidLux SDK, with the following steps:

```
# Log in to Model Farm
$ mms login -u <developer account username> -p <developer account password>
```

A successful login prints:

```
Login successfully.
```

View Models

Developers can search for models on Model Farm, view detailed model information, and make quick assessments.

Method 1: View through web page

Developers can visit Model Farm through a browser to browse and view model details.

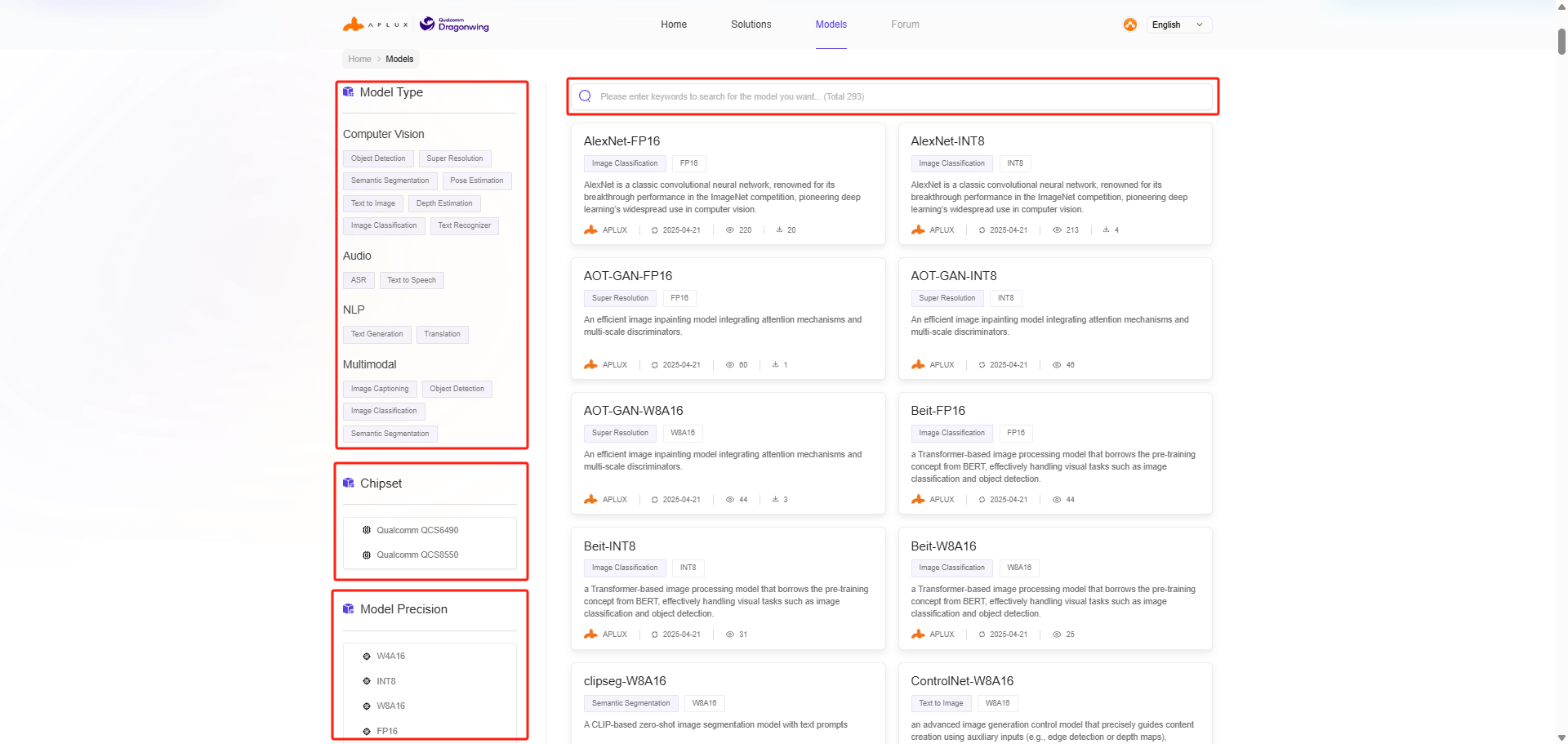

Model Farm provides multiple ways to filter and find models:

- Filter by model type

- Filter by model data precision

- Filter by chip type

- Keyword search
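Filtering by precision and chip type can also be scripted on the board using the text printed by `mms list` (see Method 2 below). The following is a minimal Python sketch. It assumes whitespace-separated Model, Precision, Chipset, and Backend columns as in that sample output, where the chipset name may contain a space. The function and class names are hypothetical and are not part of MMS.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ModelEntry:
    model: str
    precision: str
    chipset: str
    backend: str


def parse_mms_list(output: str) -> List[ModelEntry]:
    """Parse `mms list` text output, assuming Model/Precision/Chipset/Backend columns."""
    entries = []
    for line in output.splitlines():
        tokens = line.split()
        # Skip blank lines, the header row, and the dashed separator row.
        if len(tokens) < 4 or tokens[0] == "Model" or set(tokens[0]) == {"-"}:
            continue
        # The chipset (e.g. "Qualcomm QCS8550") may contain spaces, so take the
        # first two columns and the last column, and join whatever is in between.
        entries.append(ModelEntry(
            model=tokens[0],
            precision=tokens[1],
            chipset=" ".join(tokens[2:-1]),
            backend=tokens[-1],
        ))
    return entries


def filter_models(entries: List[ModelEntry], precision: Optional[str] = None,
                  chip: Optional[str] = None) -> List[ModelEntry]:
    """Keep entries matching a precision (case-insensitive) and/or a chip substring."""
    result = []
    for e in entries:
        if precision and e.precision.lower() != precision.lower():
            continue
        if chip and chip.lower() not in e.chipset.lower():
            continue
        result.append(e)
    return result


sample = """\
Model       Precision  Chipset           Backend
-----       ---------  -------           -------
YOLO-NAS-l  INT8       Qualcomm QCS6490  QNN2.29
YOLO-NAS-l  INT8       Qualcomm QCS8550  QNN2.29
YOLO-NAS-m  FP16       Qualcomm QCS8550  QNN2.29
"""

for e in filter_models(parse_mms_list(sample), precision="int8", chip="qcs8550"):
    print(e.model, e.precision, e.chipset, e.backend)
```

On a real board, replace `sample` with the captured output of the command. For example, use `subprocess.run(["mms", "list", "yolo"], capture_output=True, text=True).stdout`.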

Method 2: View through MMS

In addition to browser access, developers can obtain Model Farm model information on the development board through the Model Management Service (MMS) provided by AidLux SDK.

💡Note

Make sure AidLux SDK is installed and the environment is activated before using MMS.

Command line usage example:

```
# List all models
$ mms list

# Find models by model name
$ mms list yolo
Model       Precision  Chipset           Backend
-----       ---------  -------           -------
YOLO-NAS-l  FP16       Qualcomm QCS8550  QNN2.29
YOLO-NAS-l  INT8       Qualcomm QCS6490  QNN2.29
YOLO-NAS-l  INT8       Qualcomm QCS8550  QNN2.29
YOLO-NAS-l  W8A16      Qualcomm QCS6490  QNN2.29
YOLO-NAS-l  W8A16      Qualcomm QCS8550  QNN2.29
YOLO-NAS-m  FP16       Qualcomm QCS8550  QNN2.29
YOLO-NAS-m  INT8       Qualcomm QCS6490  QNN2.29
YOLO-NAS-m  INT8       Qualcomm QCS8550  QNN2.29
```

Download Models

Method 1: Download through web page

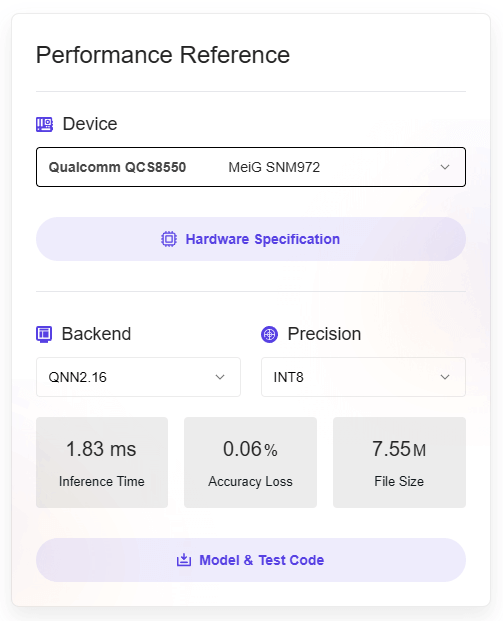

Click the button in the Performance Reference section on the model details page to download the model and code package.

Method 2: Download through MMS

After successfully logging in to Model Farm using the mms login command, developers can use the mms get command to download models and example code:

```
# Download the yolov6l model with INT8 precision, optimized for QCS8550, for the QNN2.23 inference framework
$ mms get -m yolov6l -p int8 -c qcs8550 -b qnn2.23
Downloading model from https://aiot.aidlux.com to directory: /var/opt/modelfarm_models
Downloading [yolov6l_qcs8550_qnn2.23_int8_aidlite.zip] ... done! [40.45MB in 375ms; 81.51MB/s]
Download complete!
```

For detailed usage instructions, refer to the output of `mms get -h`.

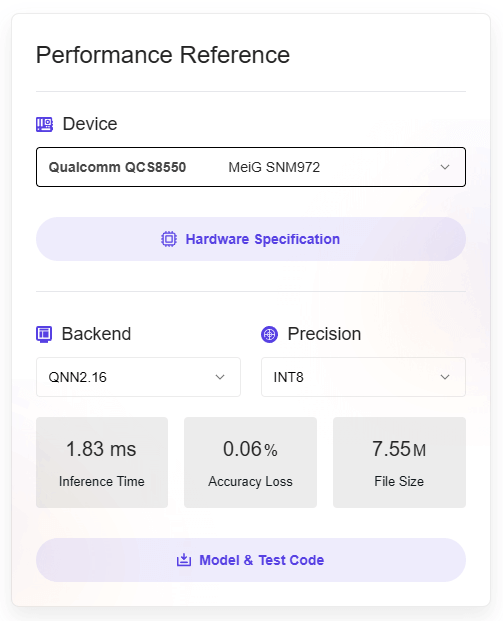
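When several model variants need to be downloaded on a board, the `mms get` call can be scripted. The following is a minimal Python sketch. It assumes `mms` is on the PATH and that you have already logged in with `mms login`. The helper names are hypothetical, and only the `-m`, `-p`, `-c`, and `-b` options from the example above are used.

```python
import shlex
import subprocess
from typing import Dict, List


def build_mms_get_cmd(model: str, precision: str, chip: str, backend: str) -> List[str]:
    """Build an `mms get` argument list from the -m/-p/-c/-b options shown above."""
    return ["mms", "get", "-m", model, "-p", precision, "-c", chip, "-b", backend]


def download_all(specs: List[Dict[str, str]]) -> None:
    """Run `mms get` for each spec in turn; stop at the first failure."""
    for spec in specs:
        cmd = build_mms_get_cmd(**spec)
        print("Running:", shlex.join(cmd))
        # check=True raises subprocess.CalledProcessError on a non-zero exit status.
        subprocess.run(cmd, check=True)
```

For example, `download_all([{"model": "yolov6l", "precision": "int8", "chip": "qcs8550", "backend": "qnn2.23"}])` runs the same command as the example above. Passing an argument list rather than a shell string avoids quoting problems. Check `mms get -h` for any other options instead of assuming them.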

Model Performance Reference

The model details page on Model Farm provides AI model performance reference on corresponding devices.

- Device: Development board and corresponding chip used for model testing

- Backend: AI framework and version used for model conversion and inference

- Precision: Precision of the optimized model

- Inference Time: Model inference time, excluding pre/post-processing

- Accuracy Loss: Cosine similarity between the output matrices of the source model (FP32) and the optimized model

- Model Size: File size of the optimized model
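Accuracy Loss is described as the cosine similarity between the outputs of the FP32 source model and the optimized model. To compare your own converted model's outputs the same way, here is a minimal pure-Python sketch. The sample values are made up. How Model Farm chooses test inputs or combines multiple output tensors is not documented here, so treat this as an illustration of the metric only. A value close to 1 means the optimized outputs point in nearly the same direction as the FP32 outputs. Because cosine similarity ignores overall scale, it does not capture a uniform scaling error.

```python
import math


def flatten(x) -> list:
    """Flatten nested lists/tuples of numbers into a flat list of floats."""
    if isinstance(x, (list, tuple)):
        out = []
        for item in x:
            out.extend(flatten(item))
        return out
    return [float(x)]


def cosine_similarity(reference, optimized) -> float:
    """Cosine similarity between two output tensors with the same number of elements."""
    a, b = flatten(reference), flatten(optimized)
    if len(a) != len(b):
        raise ValueError("outputs must have the same number of elements")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for all-zero outputs")
    return dot / (norm_a * norm_b)


fp32_out = [[0.12, 0.85], [0.33, 0.01]]   # hypothetical FP32 model output
int8_out = [[0.11, 0.86], [0.34, 0.00]]   # hypothetical quantized model output
print(round(cosine_similarity(fp32_out, int8_out), 4))
```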

💡Note

Even for the same SoC, these performance figures are for reference only; results may differ on devices with different hardware specifications.

Taking YOLOv5s on MeiG SNM972 (QCS8550) as an example:

Test Models

The models downloaded from Model Farm can be tested for inference using the following two methods:

Inference using APLUX AidLite

APLUX provides the AI Inference Framework (AidLite) for running model inference on the Qualcomm NPU.

AidLite SDK supports inference for all models on Model Farm. Model Farm also provides pre/post-processing code so that developers can quickly see model results.

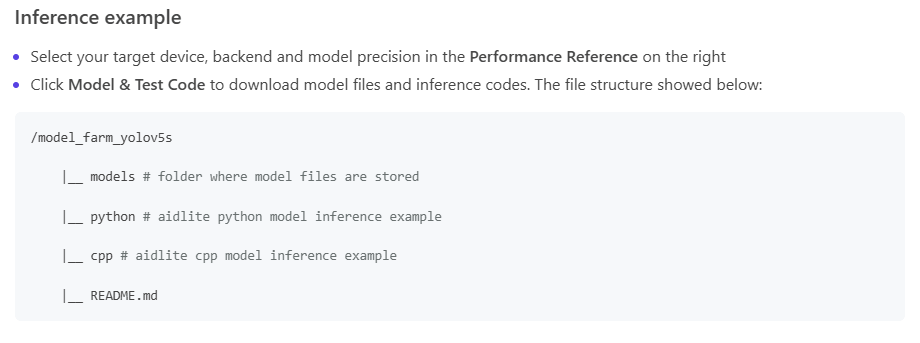

Through the Download Models step, developers can obtain a complete model file and inference code package with the following file structure (using YOLOv5s as an example):

```
/model_farm_yolov5s
|__ models      # Model files
|__ python      # Python-based model inference code
|__ cpp         # C++-based model inference code
|__ README.md   # Model information description & related software dependency installation guide
```

Running model test cases requires the following steps:

- Activate the development board environment: refer to Prepare Development Board.

- Install AidLite and other software dependencies: refer to README.md.

- Run the examples: refer to README.md.
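Before following the README, it helps to confirm that the extracted package has the expected layout, which avoids confusing errors later. The following is a small Python sketch. The expected entries are taken from the YOLOv5s example package, and other packages may differ. The function name is hypothetical.

```python
from pathlib import Path

# Top-level entries expected in the code package, based on the YOLOv5s example layout.
EXPECTED = {"models": "dir", "python": "dir", "cpp": "dir", "README.md": "file"}


def check_package(root: str) -> list:
    """Return a list of problems found in an extracted Model Farm code package."""
    base = Path(root)
    problems = []
    for name, kind in EXPECTED.items():
        path = base / name
        if kind == "dir" and not path.is_dir():
            problems.append(f"missing directory: {name}/")
        elif kind == "file" and not path.is_file():
            problems.append(f"missing file: {name}")
    # A models/ directory with nothing in it usually means an incomplete extraction.
    models_dir = base / "models"
    if models_dir.is_dir() and not any(models_dir.iterdir()):
        problems.append("models/ is empty")
    return problems
```

For example, `print(check_package("/path/to/model_farm_yolov5s"))` prints an empty list when the layout matches.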

Inference using Qualcomm QNN

Please refer to the Qualcomm QNN Documentation

Convert Fine-tuned Models

APLUX provides the AI Model Optimization Platform (AIMO) for optimizing and converting models for Qualcomm AI frameworks.

Most models on Model Farm are optimized and exported with AIMO. For this reason, Model Farm provides not only the optimized models but also reference steps for converting them with AIMO.

Model conversion reference steps can be viewed in the following two places:

- In the Performance Reference section on the right side of the model details page, click Model Conversion Reference to access it.

- In the Model Conversion Reference section of the README.md file in the code package.

For an introduction and usage of AIMO, please refer to: Model Optimization Platform AIMO User Guide

Use Case

Deploy YOLOv5s

This example demonstrates how to deploy a YOLOv5s model to the Qualcomm QCS8550 using Model Farm.

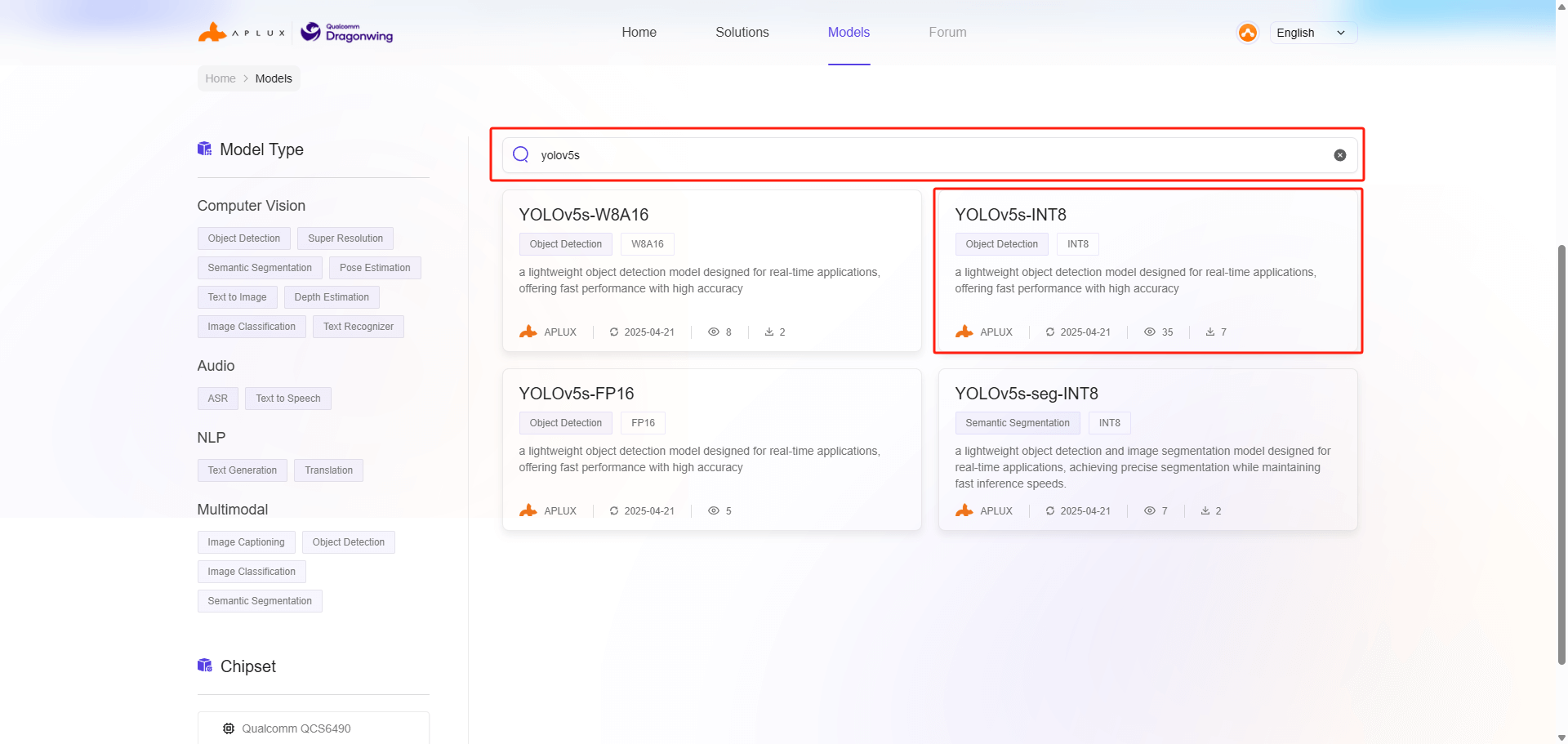

Step 1: Visit the website and find the model

Developers can directly access Model Farm through a web browser: https://aiot.aidlux.com/en/models

By searching for YOLOv5s in the search bar, developers can find YOLOv5s models in different precisions. In this case, we select INT8 quantization precision and click the YOLOv5s-INT8 model card to view the model details.

Step 2: View model performance

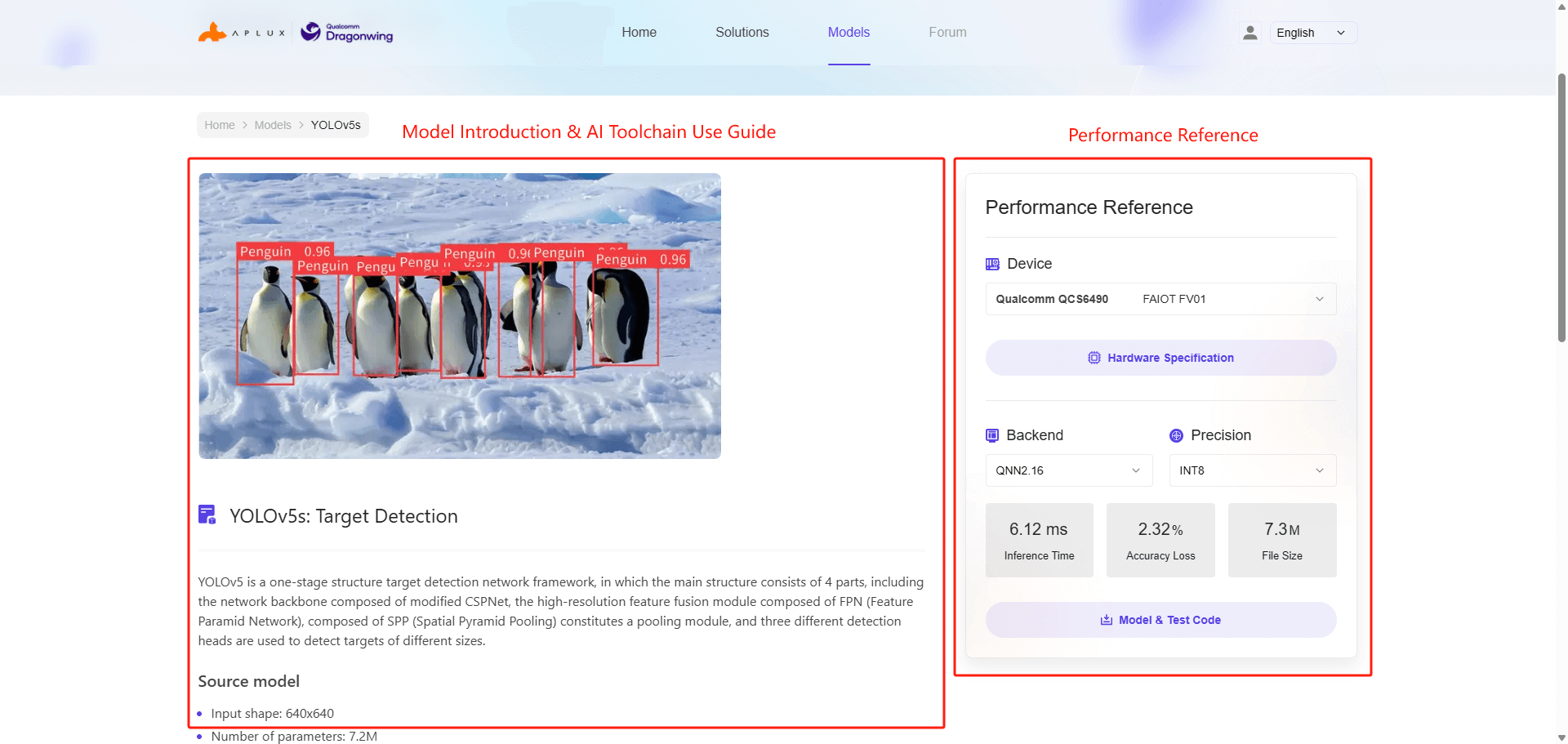

On the model details page, the left side shows the model introduction and related tool guides, and the floating window on the right displays the reference performance of the model on the corresponding hardware.

We choose the MeiG SNM972 device (QCS8550) as the reference hardware and view the performance metrics of YOLOv5s at INT8 precision, as shown in the figure below:

💡Note

The inference time metric refers to the execution time of the model, excluding pre/post-processing code
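To make measurements of your own model comparable with this metric, keep the timer around the model call only and leave pre/post-processing outside it. The following is a minimal Python sketch. It assumes `run_inference` is whichever function in the example code invokes the model; `preprocess` and `fake_model` are hypothetical stand-ins. Model Farm does not state whether it uses warm-up runs or reports a mean or a median, so the statistics here are illustrative.

```python
import statistics
import time
from typing import Callable, List


def time_inference(run_inference: Callable[[object], object], inputs: object,
                   warmup: int = 3, runs: int = 20) -> dict:
    """Time only the inference call; pre/post-processing stays outside the timer."""
    for _ in range(warmup):
        run_inference(inputs)  # warm-up runs are executed but not measured
    samples_ms: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        run_inference(inputs)
        samples_ms.append((time.perf_counter() - start) * 1000.0)
    return {
        "mean_ms": statistics.mean(samples_ms),
        "median_ms": statistics.median(samples_ms),
        "min_ms": min(samples_ms),
    }


# Hypothetical stand-ins for the example code's pre-processing and model call.
def preprocess(image):
    return [p / 255.0 for p in image]


def fake_model(tensor):
    return sum(tensor)


tensor = preprocess([10, 20, 30] * 1000)  # pre-processing runs once, outside the timed region
print(time_inference(fake_model, tensor))
```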

Step 3: Download model and code package

Developers who want to obtain model files and inference code need to register as APLUX developers. For details, refer to: Prepare Developer Account and Log in to Model Farm

After logging in, click the Model & Code button to download the model file and code package.

You can also view the file structure and description of the code package in the Inference Example section on the model details page, as shown in the figure below:

Step 4: Import the model & code package and set up dependencies

Once the development board is ready, import the downloaded code package into the development board environment. Refer to the Preparation section for environment setup.

Refer to the README.md file in the code package to install the software dependencies for model inference. In this case, only AidLite SDK (QNN2.16 version) needs to be installed. For an introduction to AidLite SDK and its usage, refer to: AI Inference Framework (AidLite)

💡Note

Each AidLite version corresponds to exactly one QNN version. You can check the QNN version under Model Details > Performance Reference or in the README.
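Because the AidLite package to install depends on the backend version listed in the Performance Reference, a small helper can derive the package name. This sketch rests on an assumption. It generalizes the single pattern shown in this guide (QNN2.16 → `aidlite-qnn216`, installed in the commands that follow) and is not an official naming rule. Always confirm the package name in the README before installing.

```python
import re


def aidlite_package_for(backend: str) -> str:
    """Map a backend string such as 'QNN2.16' to an aid-pkg package name.

    ASSUMPTION: follows the QNN2.16 -> aidlite-qnn216 pattern used in this guide.
    """
    match = re.fullmatch(r"\s*qnn\s*(\d+)\.(\d+)\s*", backend, flags=re.IGNORECASE)
    if not match:
        raise ValueError(f"unrecognised backend string: {backend!r}")
    major, minor = match.groups()
    return f"aidlite-qnn{major}{minor}"


print(aidlite_package_for("QNN2.16"))
```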

```shell
sudo aid-pkg update
sudo aid-pkg install aidlite-sdk

# Install AidLite QNN2.16 version
sudo aid-pkg install aidlite-qnn216
```

After installation, verify that AidLite SDK has been installed successfully:

```shell
# aidlite sdk c++ check
python3 -c "import aidlite; print(aidlite.get_library_version())"

# aidlite sdk python check
python3 -c "import aidlite; print(aidlite.get_py_library_version())"
```

Step 5: Run model test case

After the software dependencies are installed, follow the steps in the README.md file: go to the python or cpp folder in the code package to run the YOLOv5s example in Python or C++.
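Before running the Python example, you can combine the two version checks from Step 4 into one script. The script prints a clear message instead of a traceback when `aidlite` is missing. This minimal sketch uses only the `aidlite.get_library_version()` and `aidlite.get_py_library_version()` calls shown in Step 4 and assumes no other AidLite API. The function name is hypothetical.

```python
import importlib


def check_aidlite():
    """Return (C++ SDK version, Python SDK version), or None if aidlite cannot be imported."""
    try:
        aidlite = importlib.import_module("aidlite")
    except ImportError:
        return None
    # The same two calls used in the one-line checks in Step 4.
    return aidlite.get_library_version(), aidlite.get_py_library_version()


versions = check_aidlite()
if versions is None:
    print("aidlite is not importable; revisit the installation step")
else:
    print("AidLite C++ SDK version:", versions[0])
    print("AidLite Python SDK version:", versions[1])
```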

Step 6: Convert fine-tuned models and inference

To test a fine-tuned YOLOv5s model on QCS8550, developers can follow the reference steps Model Farm provides for converting the YOLOv5s model with AIMO.

AIMO model conversion reference steps can be viewed in the following two places:

- In the Performance Reference section on the right side of the model details page, click Model Conversion Reference to access it.

- In the Model Conversion Reference section of the README.md file in the code package.

Developers can refer to these steps to use AIMO to convert their own YOLOv5s model. For introduction and usage of AIMO, please refer to: Model Optimization Platform AIMO User Guide

After model conversion, developers can replace the YOLOv5s model file in the code package and run the test case again to check the results.
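One low-risk way to replace the model is to keep the original file name, so the example code still finds it, and to back up the Model Farm model first. The following Python sketch uses hypothetical function and file names. It assumes the example code loads the model from `models/` under a fixed file name, which you should confirm in the README. It does not adapt pre/post-processing, which may still need changes (see the note below).

```python
import shutil
from pathlib import Path


def swap_model(package_root: str, new_model: str, old_name: str) -> Path:
    """Copy a converted model over models/<old_name>, keeping a .bak copy of the original."""
    models_dir = Path(package_root) / "models"
    target = models_dir / old_name
    if not target.is_file():
        raise FileNotFoundError(f"{target} not found; check the file name in models/")
    backup = target.with_name(target.name + ".bak")
    if not backup.exists():
        shutil.copy2(target, backup)  # back up the original Model Farm model only once
    shutil.copy2(new_model, target)   # overwrite with the fine-tuned, converted model
    return backup
```

Restoring the original model is then a matter of copying the `.bak` file back over the target.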

💡Note

Models may differ, so simply replacing the model file does not guarantee that the test case will run successfully. Developers need to adjust the pre/post-processing code to fit their own models.

Deploy Qwen2.5-0.5B-Instruct

This example demonstrates how to deploy a Qwen2.5-0.5B-Instruct model to the Qualcomm QCS8550 using Model Farm.

For detailed steps, please refer to Deploying Qwen2.5-0.5B-Instruct on Qualcomm QCS8550